Top 10 Productive Use Cases of ChatGPT for the Year 2023

ChatGPT is an AI system developed by OpenAI to improve on the conventional capabilities of AI systems. It was developed specifically for use in digital assistants and chatbots. Compared to other chatbots, ChatGPT can pick up on what has already been said in the conversation, and it corrects itself if it makes a mistake. In this article, we explain the top ten productive use cases of ChatGPT for the year 2023. Read on to learn more about ChatGPT use cases for 2023.

- It Answers Questions

Compared with other chatbots, ChatGPT answers questions in a polished way. It is also capable of explaining complex issues in different ways or tones of voice.

- It Develops Apps

Some users on Twitter asked ChatGPT for help in creating an app, and it actually worked. The AI tool even gave example code that can be used for a particular scenario. In addition, it gives general tips for app development. However, its output should not be adopted without personal review and correction.

- It Acts as an Alternative to Google Search

ChatGPT is not just a competitor to other chatbots; it also has the potential to replace Google Search. This is because it gives smart answers to the queries that users would otherwise search for. The main drawback is that it does not provide source references.

- It Can Compose Emails

ChatGPT can compose emails. Some Twitter users asked ChatGPT to write ready-made emails and received finished drafts as a result. This may well put an end to the era of the blank page.

- It Creates Recipes

Depending on the request, ChatGPT can also suggest recipes. Unfortunately, at this point we cannot judge how successful these are, but it would be interesting to try the resulting recipes for yourself.

- Writing Funny Dialogue

ChatGPT also impresses users with its artistic skills. Some users had fun with the funny dialogues that the AI chatbot generated. It also writes skits, and the results are impressive and great fun to read.

- Language Modeling

ChatGPT can be used to help train other models, such as models for named entity recognition and part-of-speech tagging. This can be beneficial for businesses that need to extract meaning from text data.

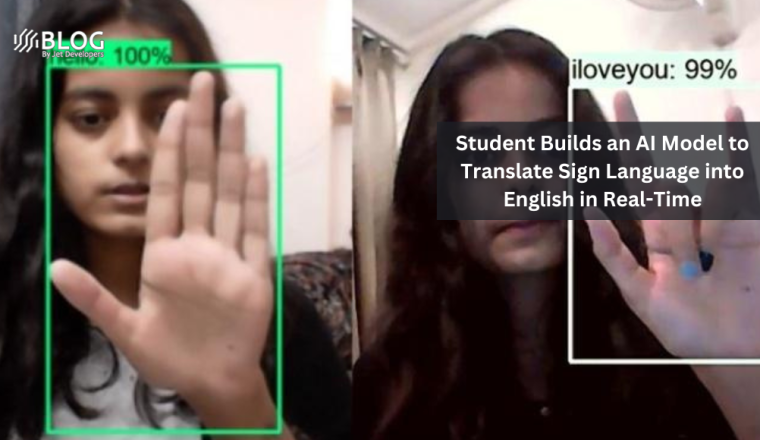

- Converts Text to Speech

ChatGPT's text responses can be paired with a text-to-speech engine, allowing it to be used in a wide range of applications such as voice assistants, automated customer service, and more.

- Text Classification

ChatGPT can be used to categorize text, for example as spam or not spam, or as positive or negative in sentiment. Businesses that need to filter or organize large amounts of text data may find this useful. Apart from that, ChatGPT can also translate text from one language to another. This can be useful for businesses that need to communicate in multiple languages with customers or partners.
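To make the spam example concrete, here is a minimal Python sketch of how a classification request could be phrased as a prompt and how the reply could be mapped back to a fixed label. The names `build_prompt`, `parse_label`, `classify`, and `ask_model` are hypothetical and chosen for illustration only. `fake_model` is a stand-in so the example runs offline; in practice it would be replaced by whatever chatbot client you use.

```python
from typing import Callable, Sequence

LABELS = ("spam", "not spam")


def build_prompt(text: str, labels: Sequence[str] = LABELS) -> str:
    """Phrase the classification task as a plain-language instruction."""
    options = ", ".join(labels)
    return (
        f"Classify the following message as one of: {options}.\n"
        "Reply with the label only.\n\n"
        f"Message: {text}"
    )


def parse_label(reply: str, labels: Sequence[str] = LABELS) -> str:
    """Map a free-form reply onto one of the allowed labels."""
    cleaned = reply.strip().strip(".").lower()
    # Check longer labels first so "not spam" is not mistaken for "spam".
    for label in sorted(labels, key=len, reverse=True):
        if label in cleaned:
            return label
    return "unknown"


def classify(text: str, ask_model: Callable[[str], str],
             labels: Sequence[str] = LABELS) -> str:
    """Send the prompt to the model (any callable) and parse its reply."""
    return parse_label(ask_model(build_prompt(text, labels)), labels)


def fake_model(prompt: str) -> str:
    """Assumption: a keyword-based stand-in for a real chatbot call."""
    message = prompt.rsplit("Message: ", 1)[-1]
    return "Spam." if "free money" in message.lower() else "Not spam"


if __name__ == "__main__":
    print(classify("Claim your FREE MONEY now", fake_model))
    print(classify("Meeting moved to 10am", fake_model))
```

Restricting the reply to a fixed set of labels, and falling back to "unknown" otherwise, keeps unexpected chatbot output from slipping into downstream filters unnoticed.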

- Sentiment Analysis

ChatGPT can analyze text sentiment to determine whether it is positive, negative, or neutral. Businesses that need to monitor customer sentiment or social media mentions may find this useful. Apart from that, ChatGPT can also be used to summarize lengthy texts, making them easier to understand and digest. This applies to news articles, legal documents, and other types of content.
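As a rough illustration of monitoring social media mentions, the Python sketch below asks a model for a one-word sentiment per mention and tallies the results. Everything here is an assumption made for the example: `sentiment_prompt`, `normalize`, and `tally_sentiment` are hypothetical helper names, and `fake_model` is a keyword-based stand-in for a real chatbot call.

```python
from collections import Counter
from typing import Callable, Iterable

SENTIMENTS = ("positive", "negative", "neutral")


def sentiment_prompt(text: str) -> str:
    """Ask for a single-word sentiment so the reply is easy to parse."""
    return (
        "Is the sentiment of the following text positive, negative, "
        "or neutral? Reply with one word.\n\n"
        f"Text: {text}"
    )


def normalize(reply: str) -> str:
    """Reduce a reply to one of the known sentiments, else 'unknown'."""
    word = reply.strip().strip(".!").lower()
    return word if word in SENTIMENTS else "unknown"


def tally_sentiment(mentions: Iterable[str],
                    ask_model: Callable[[str], str]) -> Counter:
    """Count how many mentions fall into each sentiment bucket."""
    return Counter(normalize(ask_model(sentiment_prompt(m))) for m in mentions)


def fake_model(prompt: str) -> str:
    """Assumption: a toy stand-in; a real model would read the whole text."""
    text = prompt.rsplit("Text: ", 1)[-1].lower()
    if "love" in text:
        return "Positive"
    if "broken" in text:
        return "negative."
    return "Neutral"


if __name__ == "__main__":
    mentions = [
        "I love the new update",
        "The app is broken again",
        "Version 2 is out",
        "Love it",
    ]
    print(tally_sentiment(mentions, fake_model))
```

Tracking the counts over time, rather than reading individual replies, is one simple way a business could watch for shifts in customer sentiment.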