Microsoft’s Semantic Kernel team has unveiled its plans for the fall 2023 release of Semantic Kernel, an open source SDK designed to integrate large language models (LLMs) with traditional programming languages. The plans add several new capabilities, including plugin testing, dynamic planners, and streaming.

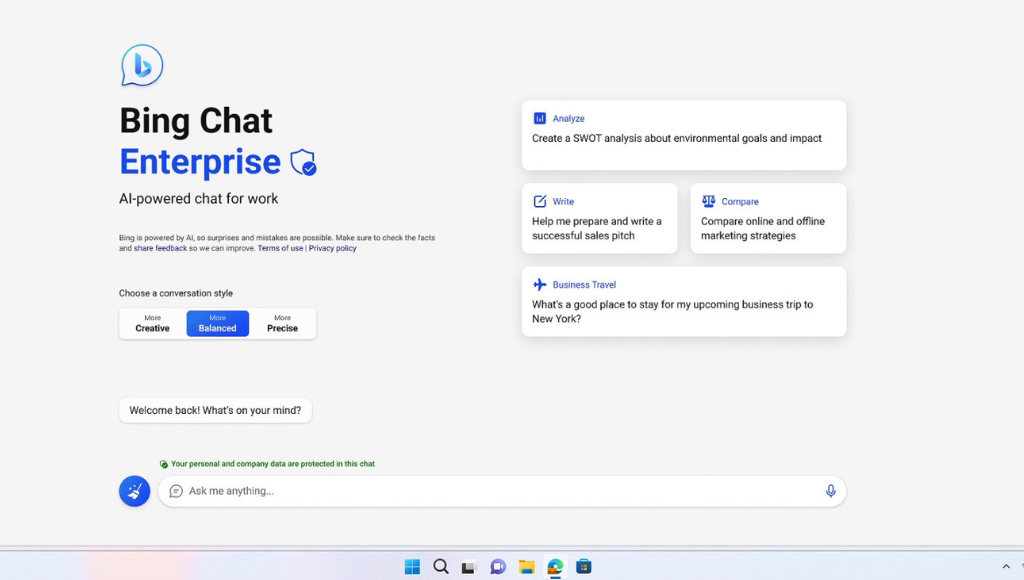

As part of this work, the Semantic Kernel team has adopted the OpenAI plugin standard, so that the same plugins can work across OpenAI, Semantic Kernel, and the Microsoft platform. The goal is interoperability among these platforms.

The team is also enhancing the planners within Semantic Kernel so they can handle global-scale deployments. Planners orchestrate the steps needed to fulfill a user request, or “ask.” Planned features include cold storage plans for consistency and dynamic planners that automatically discover and use plugins.
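To make the idea of a planner that discovers plugins concrete, here is a minimal sketch in plain Python. It does not use the Semantic Kernel API; `Plugin`, `discover_plugins`, and `execute_plan` are hypothetical names, and simple keyword matching stands in for the LLM-driven selection a real planner would perform.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Plugin:
    """Hypothetical plugin: a name, a description, and a callable step."""
    name: str
    description: str
    run: Callable[[str], str]


def discover_plugins(registry: List[Plugin], ask: str) -> List[Plugin]:
    """Pick plugins whose description shares at least one word with the ask.

    Assumption: keyword overlap is only a stand-in for the model-based
    matching a real planner would use.
    """
    ask_words = set(ask.lower().split())
    return [p for p in registry if ask_words & set(p.description.lower().split())]


def execute_plan(plan: List[Plugin], text: str) -> str:
    """Run each selected plugin in order, feeding the output forward."""
    for step in plan:
        text = step.run(text)
    return text


registry = [
    Plugin("uppercase", "convert text to uppercase", str.upper),
    Plugin("reverse", "reverse the text", lambda s: s[::-1]),
    Plugin("trim", "strip surrounding whitespace", str.strip),
]

plan = discover_plugins(registry, "uppercase then reverse")
result = execute_plan(plan, "hello")
print([p.name for p in plan], result)
```

Here the ask selects the `uppercase` and `reverse` plugins, in registry order, and skips `trim`, so `result` is `"OLLEH"`. Saving the list of selected plugin names would be one simple way to replay the same plan later, which is roughly the consistency benefit a stored plan is meant to provide.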

In line with its fall roadmap, the team is working on integrating Semantic Kernel with various vector databases, including Pinecone, Redis, Weaviate, and Chroma, as well as Azure Cognitive Search and Services. It is also developing a document chunking service and improving the Semantic Kernel Tools extension for Visual Studio Code.
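Document chunking splits long text into smaller pieces before they are embedded and stored in a vector database. The following is a minimal sketch of one common approach, fixed-size word windows with overlap. It is not the team's chunking service; the function name `chunk_text` and its parameters are assumptions made for illustration.

```python
from typing import List


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word windows of at most max_words words.

    Consecutive chunks share `overlap` words so that content cut at a
    boundary still appears whole in at least one chunk.
    """
    if max_words <= 0 or overlap < 0 or overlap >= max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")

    words = text.split()
    step = max_words - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this window has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_text(sample, max_words=5, overlap=2)
for c in chunks:
    print(c)
```

With 12 words, a window of 5, and an overlap of 2, this yields four chunks, the last one being `w9 w10 w11`. Production chunkers often split on sentences, paragraphs, or token counts instead of whitespace-delimited words.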

Telemetry and AI safety are also part of the plan. End-to-end telemetry is intended to give developers insight into goal-oriented AI plan creation, token usage, and errors. Azure Content Safety hooks are meant to give developers a consistent place to apply AI safety checks.

The Semantic Kernel repository is available on GitHub. On July 12, Microsoft also announced enhancements to endpoint management in the Semantic Kernel Tools extension for Visual Studio Code, which let developers switch between AI models more quickly.