Merlyn Mind, the company behind an AI-powered digital assistant platform, has introduced a suite of large language models (LLMs) designed specifically for education. The models, released under an open-source license, are intended to improve teaching and learning by grounding their output in curricula selected by users and by prioritizing safety.

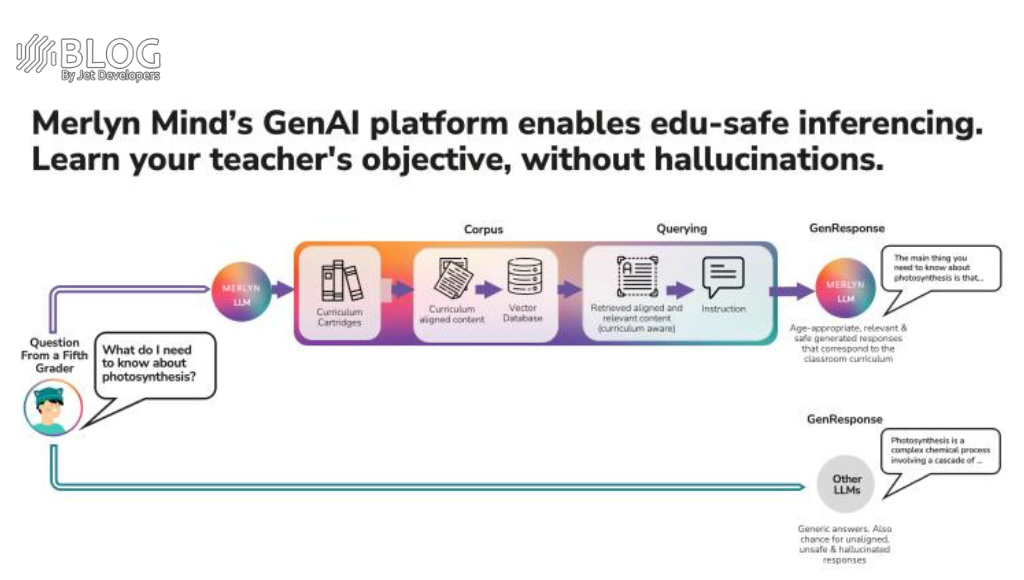

Generic LLMs used in some education services can produce inaccurate or unreliable responses. Merlyn's education-focused LLMs, by contrast, are tailored to educational workflows. Because they draw only on academic corpora chosen by users or institutions, the company aims to keep responses aligned with educational requirements and to reduce problems such as hallucinations and privacy complications.

Through Merlyn's voice assistant, teachers and students can interact with the generative AI platform in several ways: they can ask questions, have the system generate quizzes and classroom activities based on an ongoing conversation, and create custom content such as slides, lesson plans, and assessments aligned with their curriculum.

To keep answers accurate, Merlyn's LLMs first retrieve the most relevant passages from the material used for teaching. Responses are generated solely from this content rather than from the models' pretraining data, and each response is then checked by a separate language model to confirm that it is consistent with the original request.
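The retrieve, generate, and verify flow described above can be illustrated with a minimal Python sketch. This is not Merlyn's implementation, which the announcement does not describe. Everything here is a hypothetical stand-in: `retrieve` ranks passages by bag-of-words cosine similarity instead of a learned retriever, `generate` returns the top passage instead of calling an answering model, and `verify` replaces the separate checking model with a simple word-overlap grounding test.

```python
import math
import re
from collections import Counter

FALLBACK = "No supported answer was found in the selected material."

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages from the user-selected corpus most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]

def generate(query: str, passages: list[str]) -> str:
    """Hypothetical answering step. A real system would prompt a language model with
    the query and ONLY these passages; here we return the top passage verbatim."""
    return passages[0]

def verify(answer: str, passages: list[str], threshold: float = 0.8) -> bool:
    """Hypothetical checking step: accept the answer only if at least `threshold`
    of its tokens also appear in the retrieved passages."""
    answer_tokens = tokenize(answer)
    if not answer_tokens:
        return False
    support = set(tokenize(" ".join(passages)))
    covered = sum(t in support for t in answer_tokens)
    return covered / len(answer_tokens) >= threshold

def answer_question(query: str, corpus: list[str]) -> str:
    """Retrieve, draft an answer from the passages, and return it only if it passes verification."""
    passages = retrieve(query, corpus)
    if not passages:
        return FALLBACK
    draft = generate(query, passages)
    return draft if verify(draft, passages) else FALLBACK
```

For example, with a corpus containing "The French Revolution began in 1789.", the question "When did the French Revolution begin?" returns that sentence, while a drafted answer claiming "1812 under Napoleon" would fail `verify` because too few of its words are supported by the retrieved text. The point of the design is that the answer path never consults anything outside the chosen corpus, and the final gate rejects drafts the evidence does not back.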

Merlyn Mind says it prioritizes privacy and compliance with the legal, regulatory, and ethical requirements specific to educational settings. The company states that it protects personal information and follows privacy standards such as FERPA, COPPA, and GDPR, as well as applicable student data privacy laws. Merlyn screens and deletes personally identifiable information and retains only de-identified data derived from text transcripts, which it uses to improve its services and for other lawful purposes.
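To make the idea of de-identifying transcripts concrete, here is a deliberately simple Python sketch that replaces email addresses and US-style phone numbers with category placeholders before a transcript is stored. The announcement does not say how Merlyn detects PII; the `PII_PATTERNS` table and `deidentify` function are hypothetical, and regular expressions like these miss most real PII (names, addresses, student IDs), so production systems typically combine them with trained entity recognizers.

```python
import re

# Hypothetical patterns covering only two easy PII categories.
# Real de-identification must also handle names, addresses, student IDs, and more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def deidentify(transcript: str) -> str:
    """Replace every matched PII span with a placeholder such as [EMAIL] or [PHONE]."""
    for label, pattern in PII_PATTERNS.items():
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript

raw = "Email jane.doe@school.org or call 555-123-4567 after class."
print(deidentify(raw))  # only this redacted text would be retained
```

Replacing spans with labeled placeholders, rather than deleting them outright, keeps the transcript readable for later analysis while dropping the identifying values.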

Compared with generalist models, Merlyn's education-focused LLMs are smaller and more efficient to train and run. They range from 6 billion to 40 billion parameters, whereas mainstream general-purpose models typically exceed 175 billion. The smaller size gives Merlyn's LLMs lower latency, which means faster responses and more efficient use of computing resources.
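A rough back-of-the-envelope calculation shows why parameter count matters for serving cost. The sketch below estimates the memory needed just to hold model weights, assuming 16-bit (2-byte) weights; it ignores activations, the attention cache, and any training-time optimizer state, so real requirements are higher. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes fp16/bf16 weights; activations, attention cache,
    and optimizer state are not included.
    """
    return num_params * bytes_per_param / 1e9

for name, params in [("6B", 6e9), ("40B", 40e9), ("175B", 175e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights")
```

Under these assumptions, the weights take about 12 GB for a 6-billion-parameter model, about 80 GB at 40 billion, and about 350 GB at 175 billion, so the smaller models can run on far fewer accelerators.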

Satya Nitta, CEO and cofounder of Merlyn Mind, emphasized that generative AI can transform education when it is used safely and accurately. Merlyn encourages the developer community to download the models and use them to verify the safety of their own LLM responses. In addition to the voice assistant, Merlyn is available through a chatbot interface, and the company plans to make the platform accessible through an API. Merlyn also intends to contribute some of its education LLMs to open-source initiatives.

By purposefully developing AI technologies that align with domain-specific workflows and needs, Merlyn Mind envisions generative AI transforming the education industry, boosting productivity, and enabling individuals to reach their full potential.