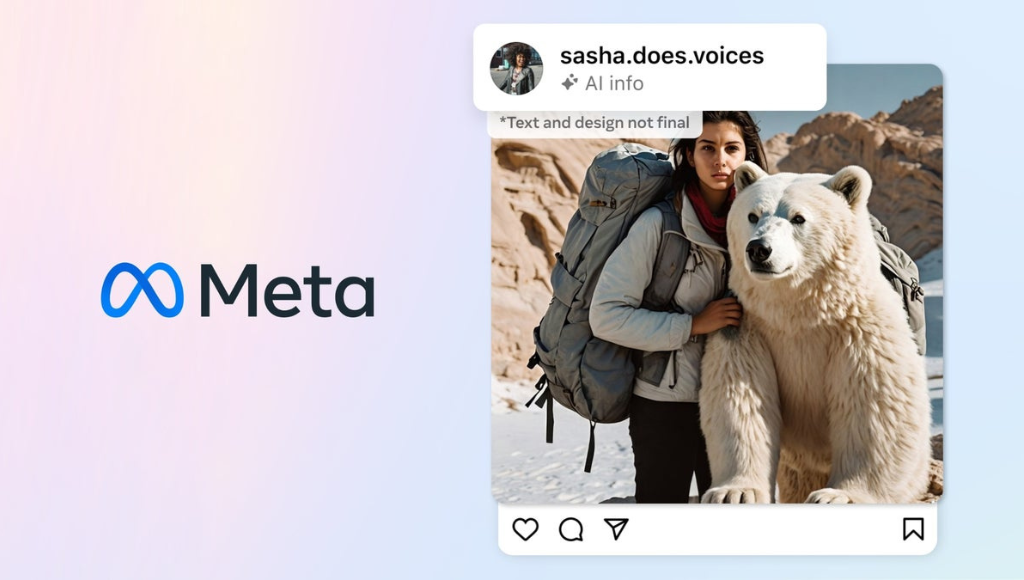

Meta, the parent company of Facebook and Instagram, has unveiled a new initiative aimed at identifying and labeling AI-generated content across its platforms. This move comes in response to growing concerns about the proliferation of deepfake images and videos, particularly after recent incidents involving AI-generated content of public figures like Taylor Swift. In this blog post, we’ll explore Meta’s latest announcement, its efforts to detect and label AI-generated content, and the implications for online safety and transparency.

Meta’s Announcement: Tackling AI-Generated Content

Addressing Growing Concerns

In a recent post, Meta announced its commitment to identifying and labeling AI-generated content on Facebook, Instagram, and Threads. This decision follows public outrage and calls for action after the circulation of pornographic deepfakes featuring Taylor Swift on social media platforms. As the 2024 US elections approach, Meta faces mounting pressure to address the spread of misleading and manipulated content.

Collaboration and Best Practices

Meta emphasized its collaboration with industry organizations like the Partnership on AI (PAI) to establish common standards for identifying AI-generated content. The company embeds invisible markers, namely IPTC metadata and invisible watermarks, in images created with its own AI technology, an approach it says aligns with PAI’s best practices. Detecting these markers allows Meta to label such images, providing users with transparency about the content’s origin.
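To make the idea of a metadata marker more concrete, here is a minimal Python sketch of how a tool might look for one. It is not Meta's detection method, which the announcement does not describe in detail. It assumes the marker is stored as an IPTC Digital Source Type URI in the file's embedded XMP text, and it simply searches the raw bytes for that URI. The function names `find_ai_source_markers` and `describe` are hypothetical, as is the sample XMP fragment.

```python
# Naive sketch: search raw file bytes for IPTC Digital Source Type URIs
# that indicate AI involvement. Assumes the XMP packet is stored as
# plain UTF-8 text inside the file, which is common but not guaranteed.

# Vocabulary prefix and terms from the IPTC Digital Source Type NewsCodes.
VOCAB_PREFIX = "http://cv.iptc.org/newscodes/digitalsourcetype/"
AI_SOURCE_TERMS = (
    "trainedAlgorithmicMedia",
    "compositeWithTrainedAlgorithmicMedia",
)


def find_ai_source_markers(data: bytes) -> list[str]:
    """Return the AI-related source type terms whose URI appears in data."""
    found = []
    for term in AI_SOURCE_TERMS:
        uri = (VOCAB_PREFIX + term).encode("utf-8")
        if uri in data:
            found.append(term)
    return found


def describe(data: bytes) -> str:
    """Produce a human-readable label for the given file bytes."""
    markers = find_ai_source_markers(data)
    if markers:
        return "Labeled as AI-generated (" + ", ".join(markers) + ")"
    return "No AI source marker found"


if __name__ == "__main__":
    # Hypothetical XMP fragment standing in for a real image file.
    sample = (
        b'<rdf:Description Iptc4xmpExt:DigitalSourceType="'
        b'http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"/>'
    )
    print(describe(sample))
    print(describe(b"bytes with no metadata"))
```

A missing marker in a check like this proves nothing on its own, since metadata can be removed when an image is re-saved or screenshotted. A production system would parse the metadata properly rather than search bytes, and would combine it with other signals such as invisible watermarks.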

Challenges and Future Directions

The Limitations of Watermarking

Experts caution that digital watermarks are not foolproof. Recent research has shown that bad actors can evade or even manipulate watermarks, raising questions about how reliably they can flag AI-generated content. Even so, watermarks remain useful: they give platforms and users a signal about where a piece of content came from, which supports transparency and accountability.

Ethical Considerations and Provenance

Margaret Mitchell, chief ethics scientist at Hugging Face, underscores the importance of provenance in AI-generated content. While digital watermarks may not entirely eliminate the risk of misuse, they provide valuable insights into the content’s lineage and evolution. Mitchell emphasizes the need for a nuanced approach to AI ethics, balancing innovation with safeguards to protect users’ rights and interests.

Conclusion

Meta’s initiative to identify and label AI-generated content marks a significant step towards enhancing transparency and accountability in social media. By collaborating with industry partners and adopting best practices, Meta aims to mitigate the risks associated with the proliferation of deepfake content. While challenges remain, including the limitations of watermarking technology, ongoing efforts underscore the importance of ethical AI development and responsible digital citizenship.

As we navigate the evolving landscape of online content, initiatives like Meta’s serve as a reminder of the collective responsibility to uphold integrity and trust in digital interactions. By prioritizing transparency and ethical practices, we can foster a safer and more inclusive online environment for all users.