Alluring and useful they may be, but the AI interfaces’ potential as gateways for fraud and intrusive data gathering is huge – and is only set to grow.

Concerns about the growing abilities of chatbots built on large language models, such as OpenAI’s ChatGPT, Google’s Bard and Microsoft’s Bing Chat, are making headlines. Experts warn of their ability to spread misinformation on a monumental scale, as well as the existential risk their development may pose to humanity. As if this weren’t worrying enough, a third area of concern has opened up – illustrated by Italy’s recent ban of ChatGPT on privacy grounds.

The Italian data regulator has voiced concerns over the model used by ChatGPT owner OpenAI and announced it would investigate whether the firm had broken strict European data protection laws.

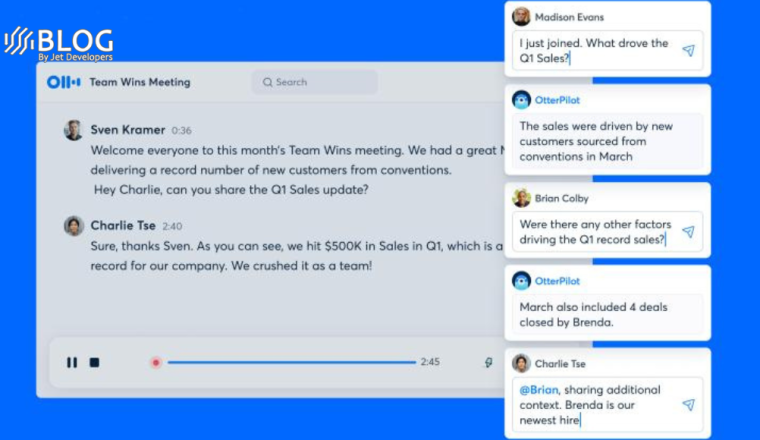

Chatbots can be useful for work and personal tasks, but they collect vast amounts of data. AI also poses multiple security risks, including the ability to help criminals perform more convincing and effective cyber-attacks.

Are chatbots a bigger privacy concern than search engines?

Most people are aware of the privacy risks posed by search engines such as Google, but experts think chatbots could be even more data-hungry. Their conversational nature can catch people off guard and encourage them to give away more information than they would have entered into a search engine. “The human-like style can be disarming to users,” warns Ali Vaziri, a legal director in the data and privacy team at law firm Lewis Silkin.

Chatbots typically collect text, voice and device information as well as data that can reveal your location, such as your IP address. Like search engines, chatbots gather data such as social media activity, which can be linked to your email address and phone number, says Dr Lucian Tipi, associate dean at Birmingham City University. “As data processing gets better, so does the need for more information and anything from the web becomes fair game.”

While the firms behind the chatbots say your data is required to help improve services, it can also be used for targeted advertising. Each time you ask an AI chatbot for help, micro-calculations feed the algorithm to profile individuals, says Jake Moore, global cybersecurity adviser at the software firm ESET. “These identifiers are analysed and could be used to target us with adverts.”

This is already starting to happen. Microsoft has announced that it is exploring the idea of bringing ads to Bing Chat. It also recently emerged that Microsoft staff can read users’ chatbot conversations, and the US company has updated its privacy policy to reflect this.

ChatGPT’s privacy policy “does not appear to open the door for commercial exploitation of personal data”, says Ron Moscona, a partner at the law firm Dorsey & Whitney. The policy “promises to protect people’s data” and not to share it with third parties, he says.

However, while Google also pledges not to share information with third parties, the tech firm’s wider privacy policy allows it to use data for serving targeted advertising to users.

How can you use chatbots privately and securely?

It’s difficult to use chatbots privately and securely, but there are ways to limit the amount of data they collect. It’s a good idea, for instance, to use a VPN such as ExpressVPN or NordVPN to mask your IP address.

At this stage, the technology is too new and unrefined to be sure it is private and secure, says Will Richmond-Coggan, a data, privacy and AI specialist at the law firm Freeths. He says “considerable care” should be taken before sharing any data – especially if the information is sensitive or business-related.

The nature of a chatbot means that it will always reveal information about the user, regardless of how the service is used, says Moscona. “Even if you use a chatbot through an anonymous account or a VPN, the content you provide over time could reveal enough information to be identified or tracked down.”

But the tech firms championing their chatbot products say you can use them safely. Microsoft says Bing Chat is “thoughtful about how it uses your data” to provide a good experience and to “retain the policies and protections from traditional search in Bing”.

The company says it protects privacy through technology such as encryption and retains information only for as long as is necessary. It also offers control over your search data via the Microsoft privacy dashboard.

ChatGPT creator OpenAI says it has trained the model to refuse inappropriate requests. “We use our moderation tools to warn or block certain types of unsafe and sensitive content,” a spokesperson adds.

What about using chatbots to help with work tasks?

Chatbots can be useful at work, but experts advise you to proceed with caution to avoid sharing too much and falling foul of regulations such as the EU’s General Data Protection Regulation (GDPR). It is with this in mind that companies including JP Morgan and Amazon have banned or restricted staff use of ChatGPT.

The risk is significant enough that the developers themselves advise against sharing anything sensitive. “We are not able to delete specific prompts from your history,” ChatGPT’s FAQs state. “Please don’t share any sensitive information in your conversations.”

Using free chatbot tools for business purposes “may be unwise”, says Moscona. “The free version of ChatGPT does not give clear and unambiguous guarantees as to how it will protect the security of chats, or the confidentiality of the input and output generated by the chatbot. Although the terms of use acknowledge the user’s ownership and the privacy policy promises to protect personal information, they are vague about information security.”

Microsoft says Bing can help with work tasks but “we would not recommend feeding company confidential information into any consumer service”.

If you have to use one, experts advise caution. “Follow your company’s security policies, and never share sensitive or confidential information,” says Nik Nicholas, CEO of data consultancy firm Covelent.
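For readers comfortable with a little scripting, one way to act on that advice is to strip obvious identifiers from text before pasting it into a chatbot. The Python below is a minimal sketch, not a complete safeguard: the `redact` function and its two patterns are hypothetical examples written for this article, they catch only email addresses and phone-like numbers, and they will miss names, addresses, account details and anything confidential that does not follow a simple pattern.

```python
import re

# Illustrative patterns only (an assumption of this sketch); real personal
# data takes many more forms than these two expressions can recognise.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Reply to jane.doe@example.com and call her on +44 20 7946 0958."
    print(redact(prompt))
```

A filter like this reduces accidental leaks but does not replace judgment: the safest approach remains not to enter sensitive material at all.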

Microsoft offers a product called Copilot for business use, which takes on the company’s more stringent security, compliance and privacy policies for its enterprise product Microsoft 365.

How can I spot malware, emails or other malicious content generated by bad actors or AI?

As chatbots become embedded in the internet and social media, the chances of becoming a victim of malware or malicious emails will increase. The UK’s National Cyber Security Centre (NCSC) has warned about the risks of AI chatbots, saying the technology that powers them could be used in cyber-attacks.

Experts say ChatGPT and its competitors have the potential to enable bad actors to construct more sophisticated phishing email operations. For instance, generating emails in various languages will be simple – so telltale signs of fraudulent messages such as bad grammar and spelling will be less obvious.

With this in mind, experts advise more vigilance than ever over clicking on links or downloading attachments from unknown sources. As usual, Nicholas advises, use security software and keep it updated to protect against malware.

The language may be impeccable, but chatbot content can often contain factual errors or out-of-date information – and this could be a sign of a non-human sender. It can also have a bland, formulaic writing style – but this may aid rather than hinder the bad actor bot when it comes to passing as official communication.

AI-enabled services are rapidly emerging and, as they develop, the risks are going to get worse. Experts say the likes of ChatGPT can be used to help cybercriminals write malware, and there are concerns that sensitive information entered into chat-enabled services could be leaked on the internet. Other forms of generative AI – AI able to produce content such as voice, text or images – could offer criminals the chance to create more realistic so-called deepfake videos by mimicking a bank employee asking for a password, for example.

Ironically, it’s humans who are better at spotting these types of AI-enabled threats. “The best guard against malware and bad actor AI is your own vigilance,” says Richmond-Coggan.