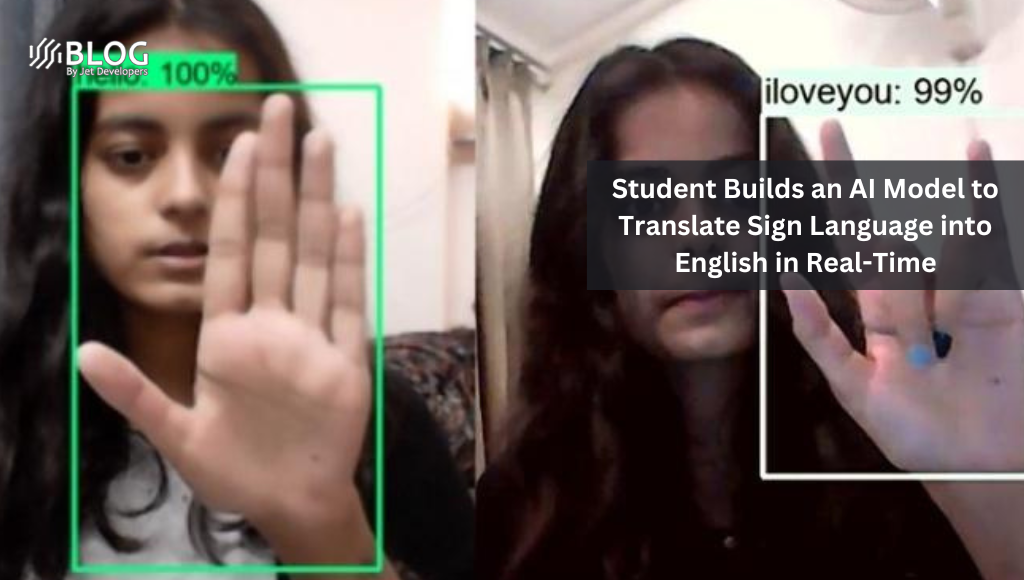

Artificial Intelligence (AI) has been used to develop various kinds of translation models that improve communication among users and break down language barriers across regions. Companies like Google and Facebook use AI to build advanced translation models for their services. Now, a third-year engineering student from India has created an AI model that can detect American Sign Language (ASL) signs and translate them into English in real time.

Indian Student Develops AI-based ASL Detector

Priyanjali Gupta, a student at the Vellore Institute of Technology (VIT), shared a video on her LinkedIn profile showing a demo of the AI-based ASL Detector in action. Although the AI model can detect signs and translate them into English in real time, it supports only a few words and phrases at the moment: Hello, Please, Thanks, I Love You, Yes, and No.

Gupta created the model using the TensorFlow Object Detection API and transfer learning from a pre-trained model called ssd_mobilenet. That means she was able to repurpose an existing, already-trained model to fit her ASL Detector rather than train one from scratch. It is also worth mentioning that the AI model does not actually translate ASL into English. Instead, it detects an object in the frame, in this case a hand sign, and determines how closely it matches each of the signs it was trained to recognize.
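To make the "detect, then match" step more concrete, here is a minimal Python sketch of how a detector's output could be turned into an English label. It is not Gupta's code: the numeric class ids, the `best_label` helper, and the 0.5 confidence threshold are assumptions made for illustration, and the detector itself is replaced by hand-written lists of class ids and scores. Only the six labels come from the article.

```python
# Hypothetical label map: the six signs named in the article.
# The numeric ids are assumed; a real project defines its own mapping.
LABEL_MAP = {
    1: "Hello",
    2: "Please",
    3: "Thanks",
    4: "I Love You",
    5: "Yes",
    6: "No",
}


def best_label(class_ids, scores, min_score=0.5):
    """Return (label, score) for the most confident detection.

    class_ids and scores are parallel lists, one entry per detected box.
    Detections below min_score (an assumed threshold) or with an unknown
    class id are ignored. Returns None if nothing qualifies.
    """
    best = None
    for class_id, score in zip(class_ids, scores):
        if score < min_score or class_id not in LABEL_MAP:
            continue
        if best is None or score > best[1]:
            best = (LABEL_MAP[class_id], score)
    return best


# Stand-in for one video frame's detections (made-up numbers).
frame_class_ids = [1, 5, 3]
frame_scores = [0.20, 0.91, 0.60]
print(best_label(frame_class_ids, frame_scores))
```

The sketch shows why the system is better described as a classifier than a translator: each frame is reduced to the single best-matching label from a fixed list, with no grammar or sentence structure involved.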

In an interview with Interesting Engineering, Gupta said that her biggest inspiration for creating the AI model was her mother nagging her “to do something” after she began her engineering course at VIT. “She taunted me. But it made me contemplate what I could do with my knowledge and skillset. One fine day, amid conversations with Alexa, the idea of inclusive technology struck me. That triggered a set of plans,” she told the publication.

In her statement, Gupta also credited a 2020 video by YouTuber and data scientist Nicholas Renotte, which details the development of an AI-based ASL Detector.

Although Gupta’s LinkedIn post drew numerous positive and appreciative responses from the community, an AI-vision engineer pointed out that the transfer learning method used in her model relies on a model “trained by other experts” and is the “easiest thing to do in AI.” Gupta acknowledged the statement and wrote that building “a deep learning model solely for sign detection is a really hard problem but not impossible.”

“Currently I’m just an amateur student but I am learning and I believe sooner or later our open-source community, which is much more experienced and learned than me, will find a solution and maybe we can have deep learning models solely for sign languages,” she added.

You can check out Gupta’s GitHub page to learn more about the AI model and access the project’s resources.