SambaNova Systems, headquartered in Palo Alto, today announced a new AI chip, the SN40L. The chip will power the SambaNova Suite, the company’s full-stack large language model (LLM) platform, which is designed to take enterprises from chip to model so they can build and deploy customized generative AI models.

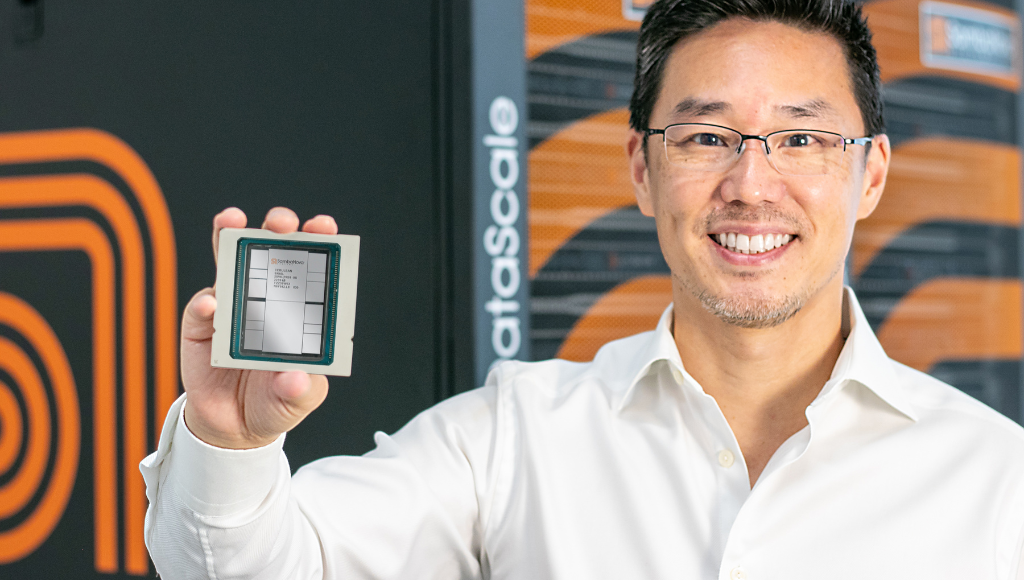

Rodrigo Liang, co-founder and CEO of SambaNova Systems, told VentureBeat that SambaNova goes further than Nvidia by offering enterprises a full-stack approach to model training.

“Many individuals were captivated by our infrastructure capabilities, but they faced a common challenge—lack of expertise. As a result, they often outsourced model development to other companies like OpenAI,” explained Liang.

To close this gap, SambaNova is pursuing what can be likened to a “Linux” moment for AI. In addition to pre-trained foundation models, the company offers a curated collection of open-source generative AI models optimized for enterprise use, whether on-premises or in the cloud.

Liang elaborated on their approach: “We take the base model and handle all the fine-tuning required for enterprise applications, including hardware optimization. Most customers prefer not to grapple with hardware intricacies. They don’t want to be in the business of sourcing GPUs or configuring GPU structures.”

Although SambaNova’s offering extends well beyond chips, Liang maintains that the hardware holds its own: “When it comes to chips, pound for pound, we outperform Nvidia.”

According to a press release, the SN40L can serve a 5-trillion-parameter model, with sequence lengths of more than 256k possible on a single system node. The company says this yields higher model quality and faster inference and training, all at a lower total cost of ownership. It adds that the chip’s larger memory enables multimodal LLM applications, letting companies search, analyze, and generate data across modalities.
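To put the 5-trillion-parameter figure in perspective, the sketch below estimates how much memory the model weights alone would occupy at a few common numeric precisions. The byte widths are assumptions chosen for illustration; the press release does not say which precision the SN40L uses, and the function name `weight_memory_tb` is hypothetical.

```python
# Back-of-the-envelope estimate of weight storage for a large model.
# The byte widths below are illustrative assumptions, not SN40L specifications.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_memory_tb(num_params: int, dtype: str) -> float:
    """Return the storage needed for the weights, in terabytes (1 TB = 10**12 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e12


if __name__ == "__main__":
    params = 5 * 10**12  # 5 trillion parameters, the figure quoted in the press release
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_tb(params, dtype):.0f} TB")
```

Under these assumptions the weights alone run to several terabytes, which is why memory capacity figures so prominently in the announcement. Activations and other runtime state are not counted here.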

SambaNova Systems also announced several additions to the SambaNova Suite:

- Llama2 Variants (7B, 70B): These open-source language models let customers adapt, extend, and deploy leading LLMs while retaining ownership of their models.

- BLOOM 176B: Billed as the most accurate open-source multilingual foundation model, BLOOM 176B lets customers address problems across a wider range of languages and can be extended to support low-resource languages.

- New Embeddings Model: This model supports vector-based retrieval-augmented generation. Customers embed their documents as vectors, and at question-answering time the most relevant documents are retrieved by comparing embeddings, which grounds the answer in source material and reduces the risk of hallucination. The LLM then processes the retrieved results for analysis, extraction, or summarization.

- Automated Speech Recognition Model: SambaNova Systems introduces an automated speech recognition model, which it describes as world-leading, for transcribing and analyzing voice data.

- Additional Multi-Modal and Long Sequence Length Capabilities: Further enhancements include inference-optimized systems with three-tier Dataflow memory, which the company says provides both high bandwidth and high capacity.
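The retrieval flow described for the embeddings model in the list above can be sketched in a few lines. This is a minimal illustration, not SambaNova’s implementation: the `embed` function is a toy bag-of-words stand-in for a learned embeddings model, and `DOCS`, `retrieve`, and `build_prompt` are hypothetical names introduced only for this example.

```python
import math
from collections import Counter

# Hypothetical document store used only for this illustration.
DOCS = [
    "The SN40L chip serves large language models",
    "BLOOM is a multilingual foundation model",
    "Speech recognition transcribes voice data",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call a learned embeddings model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents whose embeddings are most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Assemble the text an LLM would receive: retrieved context, then the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "which model is multilingual"
    print(build_prompt(question, retrieve(question, DOCS)))
```

Because the LLM is handed the retrieved passages along with the question, its answer can be checked against source text rather than relying on what the model memorized during training.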

With the launch of the SN40L and the continued development of the SambaNova Suite, SambaNova Systems aims to make generative AI more accessible and practical for enterprises while setting new standards in AI chip performance.