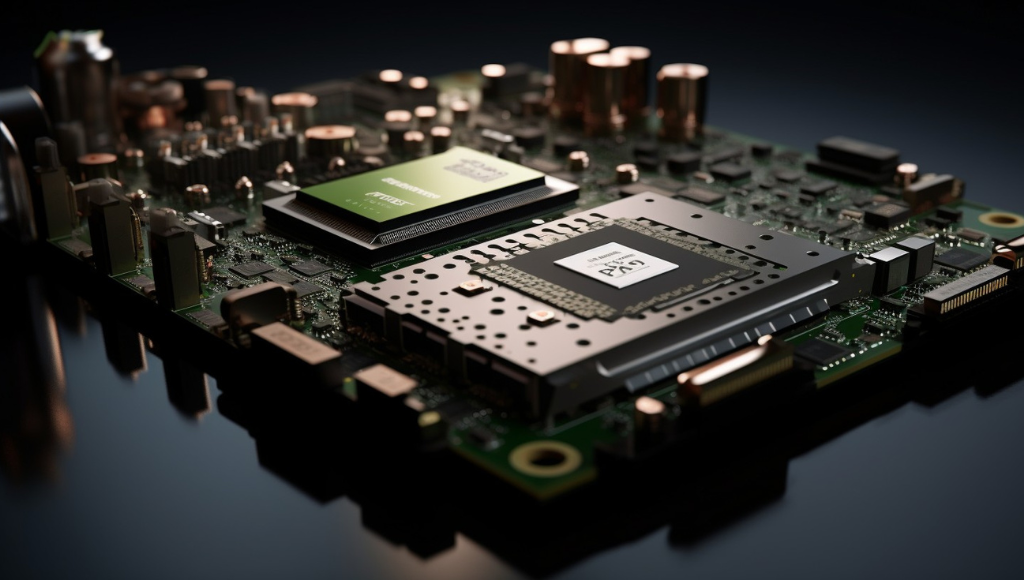

NVIDIA has introduced its latest graphics processing unit (GPU), the H200, showcasing a significant leap in performance compared to its predecessor, the H100 standalone accelerator. Engineered on the Hopper architecture, the H200 is designed to propel high-performance computing (HPC) and support the burgeoning field of generative artificial intelligence.

Notable Advancements in Memory Performance

One of the standout improvements in the H200, which has been referred to as an “AI superchip,” is the move from HBM3 to HBM3E memory, bringing a 25% increase in frequency. The pivotal enhancement is its 141 GB of memory, which delivers 4.8 terabytes per second of bandwidth. This substantially boosts performance for tasks such as text and image generation and prediction, offering almost double the capacity and 2.4 times the bandwidth of the older A100.
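The memory figures above can be sanity-checked with a little arithmetic. The sketch below is illustrative only: the 141 GB, 4.8 TB/s, and 2.4x values come from the article, while the 80 GB A100 capacity and the decimal unit conversion (1 TB = 1000 GB) are assumptions made for the example.

```python
# Figures quoted in the article for the H200.
H200_CAPACITY_GB = 141.0
H200_BANDWIDTH_TB_PER_S = 4.8
BANDWIDTH_MULTIPLE_OVER_A100 = 2.4

# Assumption (not stated in the article): the A100 used for comparison
# is the 80 GB variant.
ASSUMED_A100_CAPACITY_GB = 80.0

# A100 bandwidth implied by the article's own numbers.
implied_a100_bandwidth = H200_BANDWIDTH_TB_PER_S / BANDWIDTH_MULTIPLE_OVER_A100

# Capacity ratio under the 80 GB assumption.
capacity_ratio = H200_CAPACITY_GB / ASSUMED_A100_CAPACITY_GB

# Time to stream the full 141 GB once at peak bandwidth,
# assuming 1 TB = 1000 GB.
full_sweep_ms = H200_CAPACITY_GB / (H200_BANDWIDTH_TB_PER_S * 1000.0) * 1000.0

print(f"Implied A100 bandwidth: {implied_a100_bandwidth:.1f} TB/s")
print(f"Capacity ratio (assumed 80 GB A100): {capacity_ratio:.2f}x")
print(f"One full pass over H200 memory: {full_sweep_ms:.1f} ms")
```

Under these assumptions the capacity ratio works out to roughly 1.76, which fits the "almost double" description, and the implied A100 bandwidth is 2.0 TB/s.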

Ian Buck, NVIDIA’s Vice President of Hyperscale and HPC, highlighted the importance of processing vast amounts of data efficiently and at high speed for generative AI and HPC applications. He said that with the introduction of the NVIDIA H200, the company’s AI supercomputing platform has become faster and is poised to address some of the world’s most pressing challenges.

Compatibility and Integration

NVIDIA also revealed that the HGX H200 is compatible with HGX H100 systems, so H200 and H100 chips can be used interchangeably. The H200 is also a key component of the NVIDIA GH200 Grace Hopper Superchip, which was unveiled in August.

Ian Buck further emphasized the performance gains, noting that the H100 outperforms the A100 by a factor of 11 on GPT-3 inference, while the H200 delivers an 18-fold performance increase on the same GPT-3 benchmark.
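Those two multipliers also imply a generation-over-generation figure. As a rough sketch, assuming both the 11x and 18x numbers are measured against the same A100 baseline (the article does not say so explicitly), the H200's gain over the H100 can be estimated as follows:

```python
# Speedups on GPT-3 inference quoted in the article.
# Assumption: both are relative to the same A100 baseline.
H100_SPEEDUP_OVER_A100 = 11.0
H200_SPEEDUP_OVER_A100 = 18.0

# Dividing the two gives the implied H200-over-H100 speedup.
h200_over_h100 = H200_SPEEDUP_OVER_A100 / H100_SPEEDUP_OVER_A100

print(f"Implied H200 speedup over H100: {h200_over_h100:.2f}x")
```

On that reading, the H200 would be roughly 1.6 times faster than the H100 on this benchmark.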

Teasing the Future: B100 GPU

During the presentation, NVIDIA provided a glimpse of its next-generation GPU, the B100, hinting at even more advanced performance capabilities. Although specific details were not disclosed, it is suggested that the B100 might be unveiled by the end of the current year.

Anticipated Availability

The H200 is expected to begin shipping in the second quarter of 2024. It can be deployed in a range of data center environments: on-premises, in the cloud, in hybrid cloud configurations, and at the network edge. NVIDIA’s partner companies, including ASRock Rack, ASUS, and Dell Technologies, can integrate the H200 into existing systems, providing flexibility in deployment.

Wide Adoption in the Cloud

Major cloud service providers such as Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure plan to offer servers and computing resources powered by the H200. CoreWeave, Lambda, and Vultr are also among the early adopters, integrating H200-based instances into their respective cloud offerings.

NVIDIA’s Strong Position in the Market

NVIDIA, which reached a trillion-dollar valuation in May, continues to ride the wave of the AI boom. The company’s robust financial performance, with second-quarter revenue of $13.5 billion in 2023, underscores its prominent position in the market.