Nvidia’s Explosive Q2 Performance Validates Generative AI Growth

Nvidia, the US-based semiconductor giant, made a significant impact yesterday with its outstanding performance in the second quarter of the fiscal year 2023-24. The company’s revenue for this quarter reached an impressive $13.51 billion, marking an astonishing 88 percent surge from the previous quarter and a remarkable 101 percent increase compared to the same period last year.

This remarkable growth exhibited by Nvidia serves as undeniable proof that the movement towards generative artificial intelligence and large language models is not merely a trend but a tangible reality. As a major player in the field, Nvidia stands as a powerhouse in producing the cutting-edge chip technology that drives generative AI.

Jensen Huang, the CEO of Nvidia who established the company in 1993, expressed, “We are witnessing the dawn of a new computing era.”

Following the announcement, Nvidia’s stock price soared by 6 percent, reflecting the market’s enthusiastic response. The company has also seen an extraordinary year-on-year surge of 422 percent in its net income, reinforcing its status as a trillion-dollar valuation enterprise. Looking forward, Nvidia anticipates a revenue of $16 billion for the upcoming fiscal year, with a potential margin of error of 2 percent.

Nvidia is poised for substantial growth in the forthcoming year, with the company’s optimistic outlook stemming from a clear increase in demand. To accommodate this rising demand, Nvidia has proactively secured an expanded supply.

The past year has undoubtedly been a momentous one for Nvidia. The launch of the GH200 Grace Hopper Superchip designed for intricate AI workloads, alongside the introduction of the Nvidia L40S GPU aimed at accelerating complex applications, underscored the company’s commitment to innovation. Notably, the upcoming GH200 Superchips, slated for release next year, will feature the same GPU as the highly sought-after H100 AI chip but with triple the memory capacity.

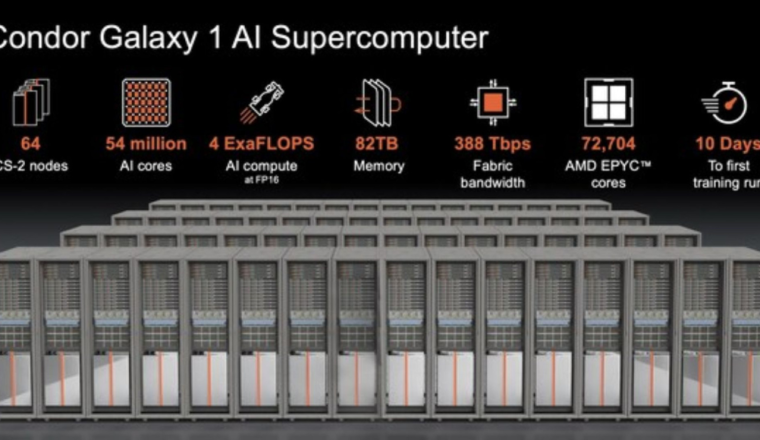

Huang further emphasized, “Throughout the quarter, major cloud service providers unveiled extensive NVIDIA H100 AI infrastructures. Prominent enterprise IT systems and software providers also formed partnerships to integrate NVIDIA AI across various industries. The race to adopt generative AI is in full swing.”

Nvidia’s chips have revolutionized graphics through AI, ushering in an entirely new realm of experience for gamers. The gaming sector has contributed significantly to Nvidia’s revenue, with a substantial $2.49 billion generated, marking an 11 percent increase from the first quarter and a notable 22 percent rise from the previous year.

Achieving a valuation exceeding a trillion dollars in May placed Nvidia among the elite group of US companies in this category. Investors have gravitated towards Nvidia, recognizing it as a major beneficiary of the AI surge. Even OpenAI, the catalyst behind the generative AI boom with its chatbot, utilized Nvidia’s H100 Hopper chips for training and running its GPT model.

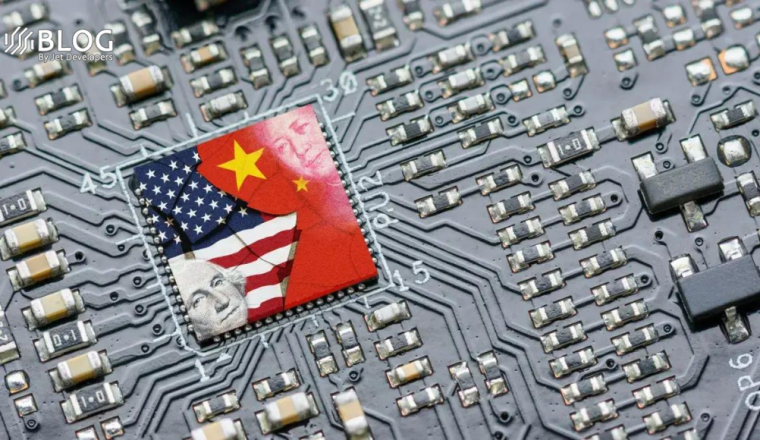

Nvidia has emerged as a strong competitor to prominent GPU manufacturers based in China, Taiwan, and Hong Kong – key players in the industry. The US-imposed export regulations from October of the preceding year have placed Chinese firms at a disadvantage, as they are now restricted from purchasing US-manufactured chips. Simultaneously, the US government has invested substantial funds as incentives for the domestic chip sector.

Consequently, Nvidia’s strategic exports, such as the A800 processor to China, have inadvertently contributed to a dominant GPU market position. Huang affirmed, “Companies worldwide are making the shift from general-purpose computing to accelerated computing and generative AI.”

Nevertheless, China remains determined not to lag behind in the AI race. According to a Financial Times report, major Chinese entities like Baidu, ByteDance, Tencent, and Alibaba have placed significant orders with Nvidia, encompassing about 100,000 A800 processors and GPUs. The combined billing for these orders stands between $1 billion and $4 billion, showcasing China’s fervent pursuit of AI technology.