Adopting Cloud Smart: The New Era in IT Architecture

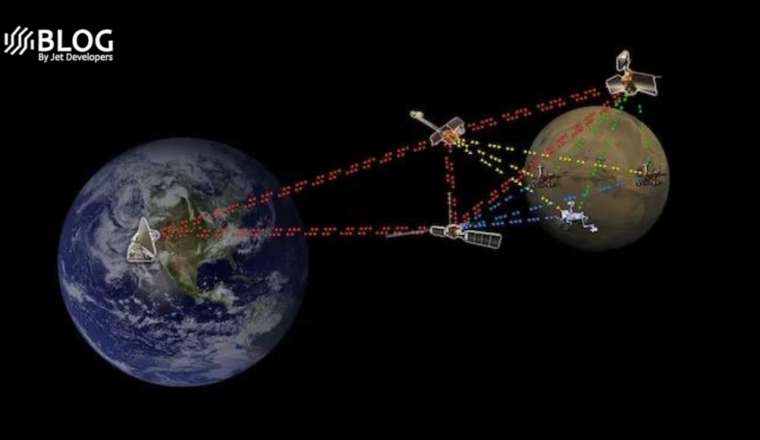

The era of “Cloud First” has evolved, giving way to a more nuanced approach known as Cloud Smart. In this shifting landscape of IT architecture, hybrid cloud, with a mix of on-premises and off-premises solutions, has become the default choice. It’s not merely a transitional phase en route to “cloud maturity” but rather a preferred state for many IT leaders and organizations.

Hybrid cloud’s appeal lies in its flexibility, enabling organizations to leverage existing data center infrastructure while harnessing the advantages of the cloud. This approach optimizes costs and extends on-premises IT capabilities, making it an attractive and sustainable solution.

Hybrid cloud is also gaining popularity among predominantly on-premises organizations that want access to the latest cloud technologies. As businesses rely more heavily on advanced technologies such as AI for faster, more efficient data processing and analysis, the cloud offers a scalable and cost-effective way to run them without significant hardware investment, while still addressing cybersecurity concerns.

However, navigating this transition requires careful planning. Rushing into the cloud can lead to hasty decisions and a negative return on investment. Some organizations migrate the wrong workloads to the cloud and then have to move them back at considerable cost.

Beyond the financial setbacks, organizations without a well-thought-out cloud strategy can find themselves unable to keep pace with the exponential growth of data. Rather than gaining efficiency and productivity, they risk falling behind their competitors and missing the benefits of a successful cloud migration.

One common pitfall is the failure to involve infrastructure teams in the migration process, leading to a disjointed solution that hampers performance. Cloud projects are often spearheaded by software architects who may overlook the critical infrastructure aspects that impact performance. It’s crucial to strike the right balance by aligning infrastructure and software architecture teams, fostering better communication to optimize hybrid cloud deployments.

These challenges are pressing because demand for hybrid cloud solutions keeps growing. Over three-quarters of enterprises now use multiple cloud providers, and one-third run more than half of their workloads in the cloud. Investment in both on-premises infrastructure and public cloud is expected to grow, and Gartner projects substantial spending on public cloud services.

The Growing Demand for Hybrid Cloud

Hybrid cloud lets organizations combine the advantages of public and private clouds and choose where each workload is hosted. That flexibility improves resource allocation and cloud infrastructure performance, which in turn contributes to cost savings.

Furthermore, hybrid cloud allows organizations to leverage the security benefits of both public and private clouds, offering greater control and advanced security approaches as needed. Many organizations also turn to hybrid cloud to rein in escalating monthly public cloud bills, especially when dealing with cloud sprawl and storage costs.

The “pay as you go” model is a boon only if organizations know how to manage it. Long-lived, steadily growing storage is the case to watch most closely: the monthly bill rises with every terabyte retained, so the cumulative cost grows faster than the data itself.
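To make the storage point concrete, here is a minimal Python sketch that compares cumulative pay-as-you-go spend against a fixed-capacity alternative as data grows by a constant amount each month. Every name and figure in it (the function names, the per-terabyte price, the upfront and monthly fixed costs) is a hypothetical placeholder chosen for illustration, not a quote from any provider; substitute your own numbers before drawing conclusions.

```python
from typing import Optional


def cumulative_cloud_cost(initial_tb: float, monthly_growth_tb: float,
                          price_per_tb_month: float, months: int) -> float:
    """Total pay-as-you-go spend when stored data grows linearly each month."""
    total = 0.0
    stored = initial_tb
    for _ in range(months):
        total += stored * price_per_tb_month  # bill for data held this month
        stored += monthly_growth_tb           # data retained for next month
    return total


def breakeven_month(initial_tb: float, monthly_growth_tb: float,
                    price_per_tb_month: float, fixed_upfront: float,
                    fixed_monthly: float,
                    horizon_months: int = 120) -> Optional[int]:
    """First month in which cumulative cloud spend exceeds the cumulative
    cost of a fixed-capacity alternative, or None within the horizon."""
    cloud_total = 0.0
    stored = initial_tb
    for month in range(1, horizon_months + 1):
        cloud_total += stored * price_per_tb_month
        fixed_total = fixed_upfront + fixed_monthly * month
        if cloud_total > fixed_total:
            return month
        stored += monthly_growth_tb
    return None


if __name__ == "__main__":
    # Hypothetical inputs: 100 TB today, +10 TB per month, 20 currency units
    # per TB-month, versus 50,000 upfront plus 1,000 per month on-premises.
    print(breakeven_month(100, 10, 20.0, 50_000, 1_000))
```

The model is deliberately simple: it ignores egress fees, storage tiers, hardware refresh cycles, and staffing, all of which can move the crossover point in either direction. Its only purpose is to show that a steady growth rate turns a flat-looking unit price into a bill that accelerates.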

In conclusion, “Cloud First” is giving way to “Cloud Smart.” This shift acknowledges the importance of optimizing both on-premises and cloud-based IT infrastructure. A “Cloud Smart” architectural approach lets enterprises design adaptable, resilient solutions that align with their evolving business needs. Striking the right balance between on-premises and cloud solutions improves performance, reliability, and cost-efficiency, and ultimately drives better long-term outcomes.